Content from Metadata

Last updated on 2025-01-14

Outcomes

1- Define metadata and its various types.

2- Recall the community standards and how to apply them to data and metadata.

3- Define data provenance.

Metadata

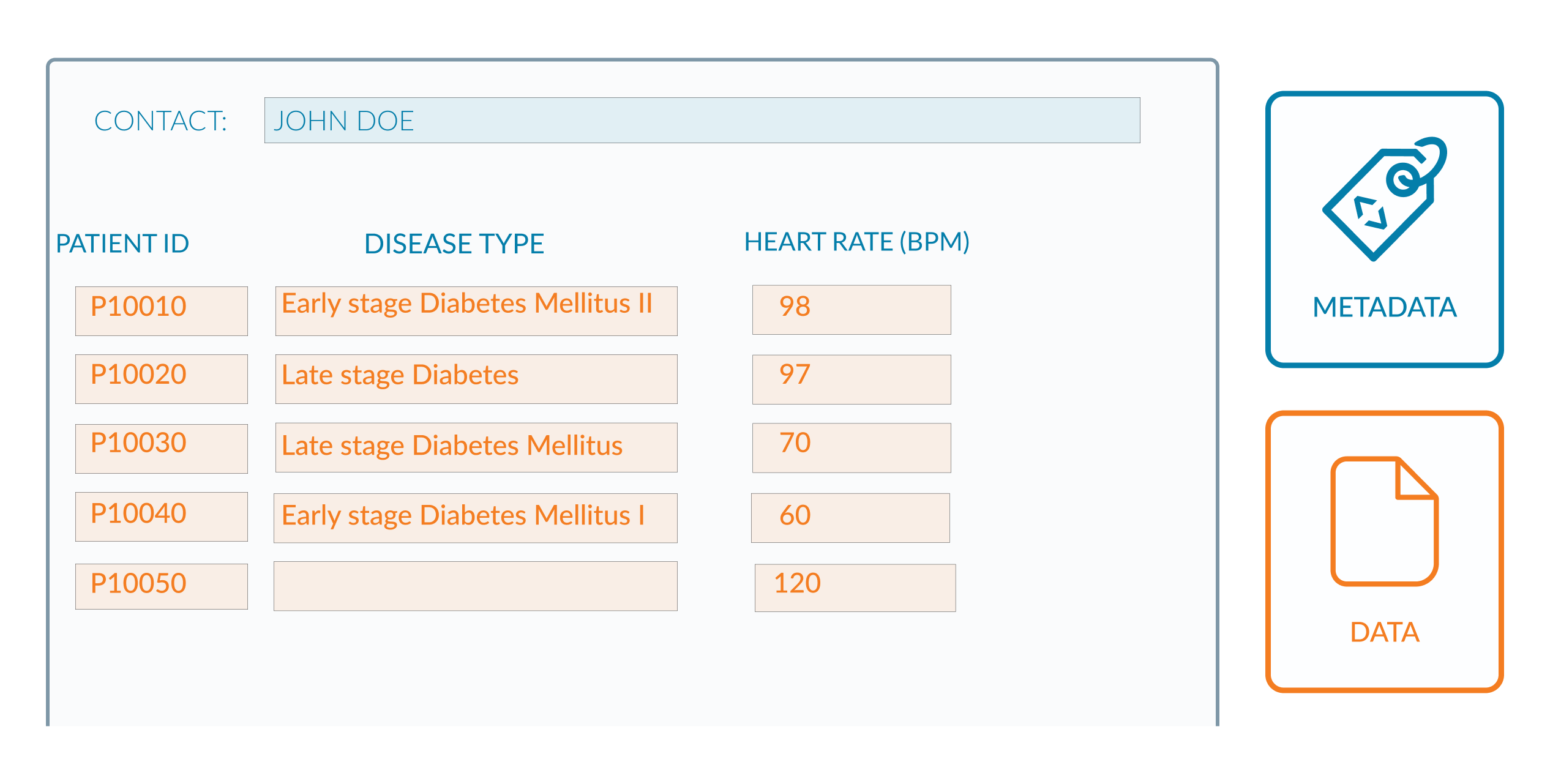

The description of your data is called metadata. For example, in a data file containing the values from an assay, such as an Excel spreadsheet, the column headings give those values meaning and context: the values are the data, and the column headings are metadata. Any documentation that explains the accompanying Excel file is also metadata.

In the image, the data (heart rate, genotype and patient ID) are coloured orange. The metadata that describe what these data are (the name of the cohort and a contact e-mail for more information on the dataset) are shown in blue.
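To make the split between data and metadata concrete, here is a minimal Python sketch of one way to keep both in a single text file. It assumes a hypothetical layout in which `# key: value` lines hold dataset-level metadata (cohort name, contact e-mail) and the rest is a CSV table whose header row holds the column headings. The layout, field names and values are illustrative assumptions, not a standard format.

```python
import csv
import io

# Hypothetical file layout: lines starting with "#" hold metadata about the
# whole dataset; the remaining lines are a CSV table whose header row holds
# the column headings (also metadata) and whose other rows hold the data.
EXAMPLE = """\
# cohort: Human Welsh Cohort
# contact: data-manager@example.org
patient_id,genotype,heart_rate_bpm
P001,AA,72
P002,AG,65
"""


def split_metadata_and_data(text):
    """Return (dataset-level metadata dict, list of data rows as dicts)."""
    metadata = {}
    table_lines = []
    for line in text.splitlines():
        if line.startswith("#"):
            # "# key: value" -> metadata["key"] = "value"
            key, _, value = line.lstrip("# ").partition(":")
            metadata[key.strip()] = value.strip()
        elif line.strip():
            table_lines.append(line)
    rows = list(csv.DictReader(io.StringIO("\n".join(table_lines))))
    return metadata, rows


metadata, rows = split_metadata_and_data(EXAMPLE)
print(metadata["cohort"])          # Human Welsh Cohort
print(rows[0]["heart_rate_bpm"])   # 72
```

Keeping the metadata in the same file means it cannot get separated from the values it describes; many repositories instead expect a separate metadata file.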

What else would metadata reveal?

To understand what else metadata can describe, let’s look at the previous image again: what else would you add to understand the data better?

Additional metadata for provenance and general description can be added. For example, the cohort name needs more information, because “Human Welsh Cohort” does not distinguish it from other existing Welsh cohorts. Here, we would include a unique ID or working title for the cohort, as well as a project URL describing its origin and composition.

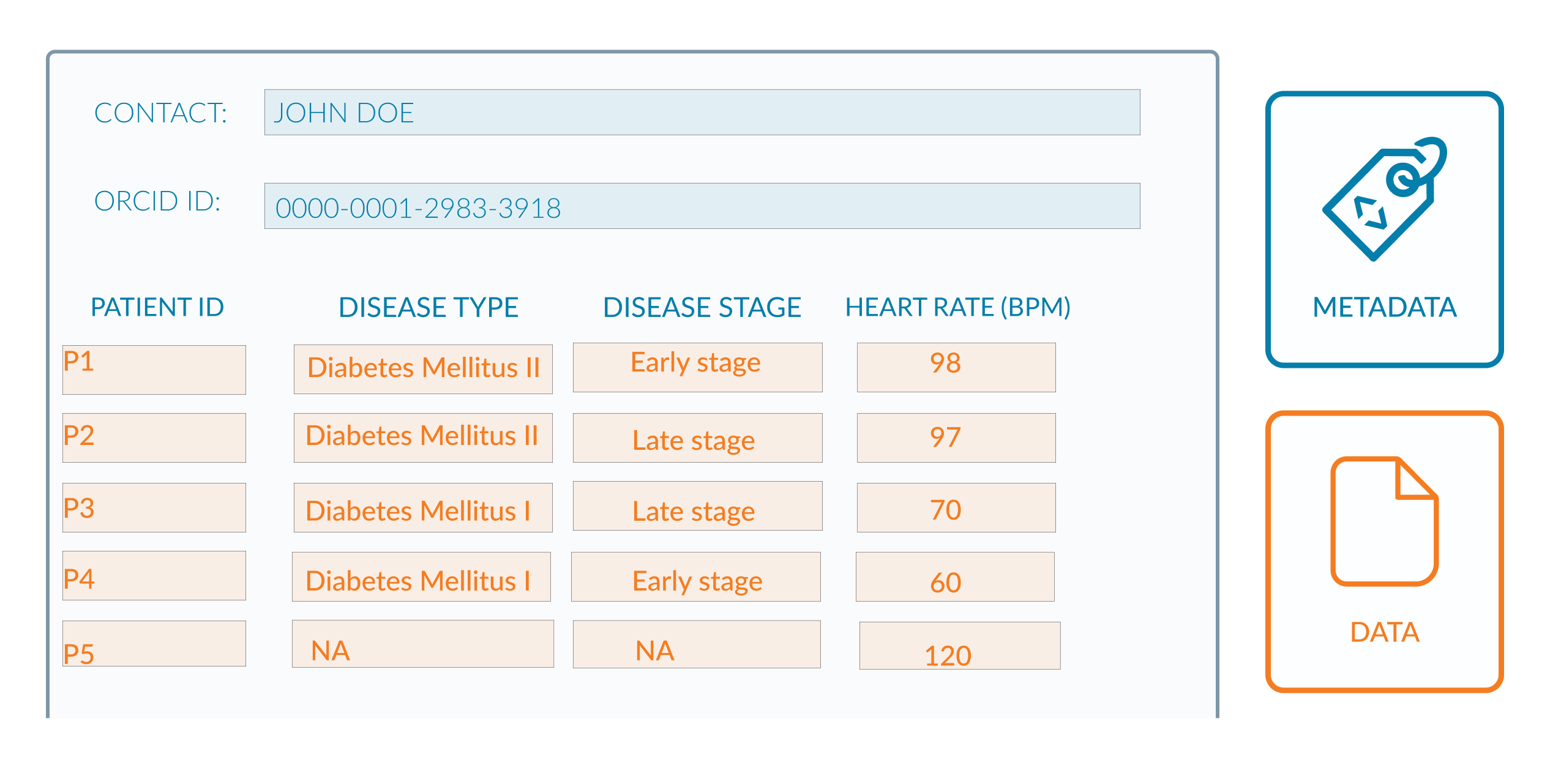

The column headings appear to be complete, though there are some issues with the data in orange. The diabetes status column appears to capture both the type and the stage of the disease:

- In row 3, it is unclear whether the disease is diabetes mellitus or diabetes insipidus.

- In row 4, it is unclear whether the diabetes mellitus is type 1 or type 2.

- The final row is empty. It is unclear whether this is due to missing information or because the patient does not have diabetes.

To do this better, two separate columns are created for the type and the stage of the disease, and the disease name states whether it is type 1 or type 2. You can check this in the figure.
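The same clean-up can be sketched in Python. This is a minimal illustration, assuming hypothetical raw entries in a single `diabetes_status` column and a hand-curated mapping for the unambiguous ones. It splits each entry into explicit `disease` and `stage` fields, records an empty cell as "not recorded" instead of silently treating it as "no diabetes", and flags ambiguous text for review. The entries, stage names and marker string are assumptions for illustration.

```python
# Hypothetical raw entries from a single "diabetes_status" column.
RAW_VALUES = [
    "Diabetes mellitus type 1, early",
    "Diabetes, late",              # mellitus or insipidus?
    "Diabetes mellitus, early",    # type 1 or type 2?
    "",                            # missing, or no diabetes?
]

# Hand-curated mapping for entries whose meaning is unambiguous (illustrative).
KNOWN = {
    "Diabetes mellitus type 1, early": {
        "disease": "diabetes mellitus type 1",
        "stage": "early",
    },
}

NOT_RECORDED = "not recorded"


def restructure(raw):
    """Split one raw entry into explicit disease and stage columns."""
    record = {"disease": NOT_RECORDED, "stage": NOT_RECORDED,
              "needs_review": False, "raw": raw}
    if not raw.strip():
        # An empty cell means the information is missing; it must not be
        # read as "the patient does not have diabetes".
        return record
    if raw in KNOWN:
        record.update(KNOWN[raw])
    else:
        # Ambiguous text is kept and flagged for the data provider to resolve.
        record["needs_review"] = True
    return record


for value in RAW_VALUES:
    print(restructure(value))
```

The design choice is to never guess: anything that cannot be mapped with certainty keeps its raw text and is flagged.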

Building on the previous examples, metadata can show a variety of things, such as your data’s characteristics and data provenance, which explains how your data was created. Metadata is classified into three types: descriptive, structural and administrative.

- Descriptive metadata describes the characteristics of the dataset.

- Structural metadata describes how the dataset is generated and structured internally.

- Administrative metadata describes who was in charge of the data, who worked on the project and how much money was spent.
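One way to see the three types side by side is a metadata record grouped by type, with a check for gaps. This Python sketch is illustrative only: the field names, the required fields per type and the example values are assumptions, not a standard schema.

```python
# Hypothetical metadata record, grouped by the three metadata types.
record = {
    "descriptive": {
        "title": "Heart rate and genotype in an example cohort",
        "keywords": ["heart rate", "genotype"],
    },
    "structural": {
        "files": ["participants.csv", "README.txt"],
        "format": "CSV",
    },
    "administrative": {
        "contact": "data-manager@example.org",
        "contributors": ["A. Researcher"],
        "funding": "",  # not filled in yet
    },
}

# Which fields we (hypothetically) require for each metadata type.
REQUIRED = {
    "descriptive": ["title", "keywords"],
    "structural": ["files", "format"],
    "administrative": ["contact", "contributors", "funding"],
}


def missing_fields(record, required=REQUIRED):
    """Return 'type.field' names that are absent or empty in the record."""
    missing = []
    for metadata_type, fields in required.items():
        section = record.get(metadata_type, {})
        for field in fields:
            if not section.get(field):
                missing.append(f"{metadata_type}.{field}")
    return missing


print(missing_fields(record))  # ['administrative.funding']
```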

Identify types of metadata in this microarray dataset

Let’s look at an example using microarray data from the ArrayExpress database. This dataset contains both data and metadata. The administrative metadata are in the orange square. The descriptive metadata are in the black square and, as you can see, summarize the dataset. The structure of the dataset and its files is marked by the dark blue square, which represents structural metadata.

Exercise

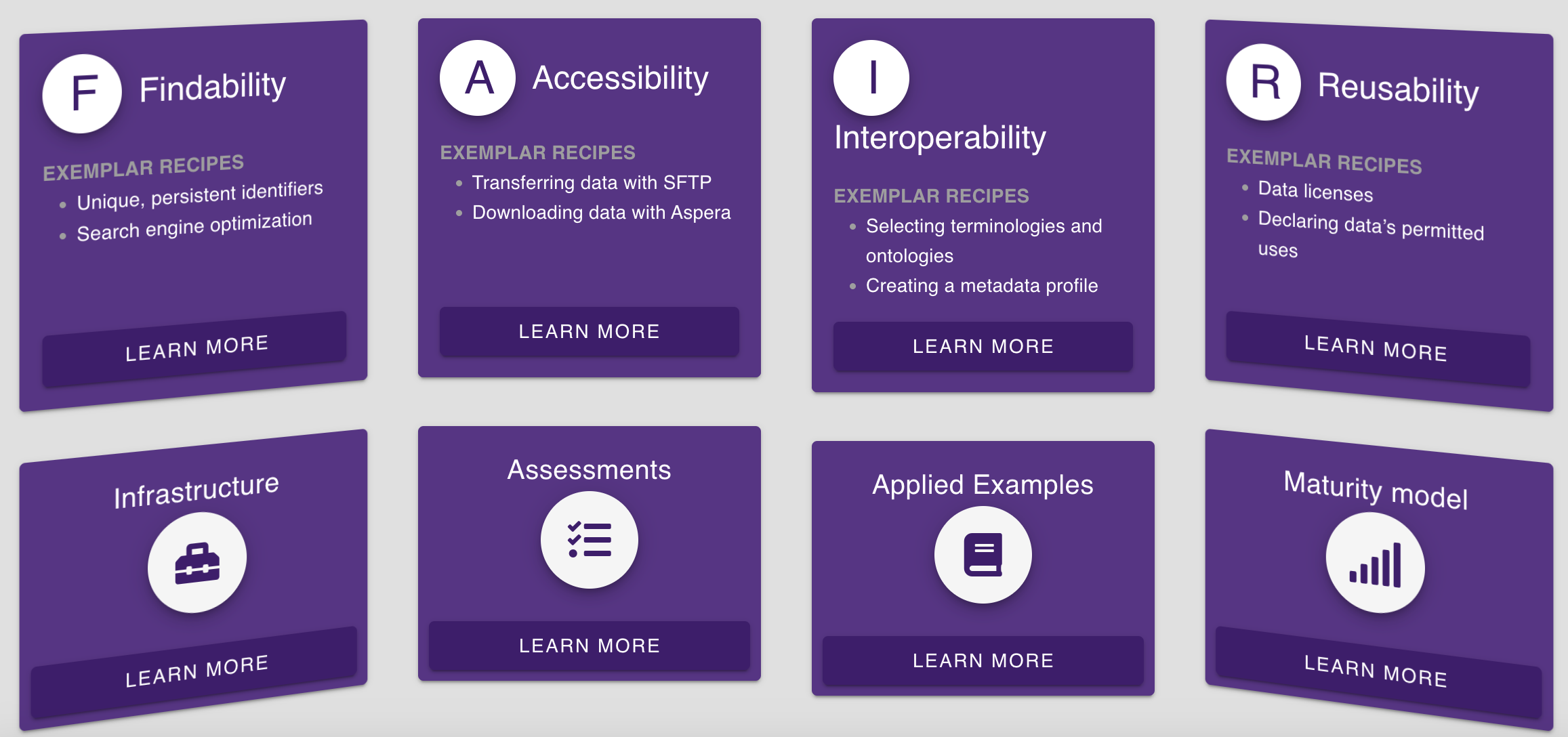

- From the FAIR Cookbook, can you find the recipe on how to create metadata profiles for your dataset? You can start from here.

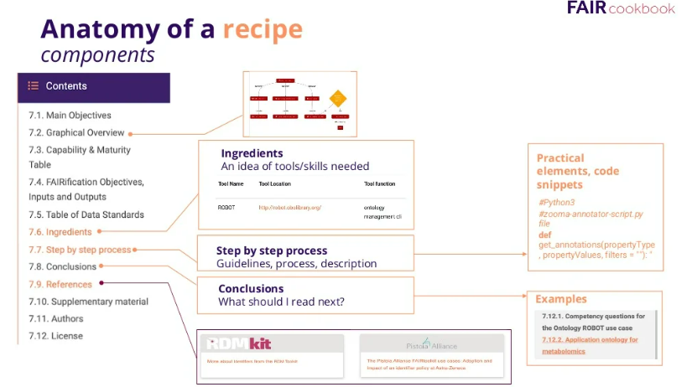

First of all, let’s understand the structure of the FAIR Cookbook. For a quick overview, you can watch our RDMBites video on the FAIR Cookbook: FAIRcookbook RDMBites

The building unit of the FAIR Cookbook is called a recipe: a set of instructions for how to FAIRify your data. As you see in the image, each recipe includes these main items:

1- Graphical overview: a mind map of the recipe

2- Ingredients: the skills needed and the tools you can use to apply the recipe

3- The steps and the process

4- Recommendations of what to read next, and references for your reading

So let’s use the search box and type “metadata profiles”. When the results come up, choose “Metadata profiles”. As we explained earlier, the recipe shows the steps needed to create metadata profiles for different data types.


Data and metadata should follow Community standards

Each data type has its own community that develops guidelines to ensure that data and metadata are appropriately described. Make sure to follow the community standards when describing your data: data described according to community standards are more reliable for other researchers. If you decide to use other guidelines, document this clearly. Using community standards allows your data to be reused and makes it easily interoperable across multiple platforms. We provide examples of various community standards that you can use to ensure that your data is described correctly.

Exercise

RDMkit provides domain-specific training on community standards for each domain. Using this training, can you find the bioimage community standards?

RDMkit is the Research Data Management toolkit for Life Sciences. It provides best practices and guidelines to help you make your data FAIR (Findable, Accessible, Interoperable and Reusable), as well as a catalogue of tools and resources for research data management.

As you can see in the image above, RDMkit covers a variety of research data management topics. Community standards are covered under the domain tab, which provides community standards for different types of data.

You can find the bioimage community standards at the top of the page. As you can see, the page covers the following:

1- What is bioimage data and metadata?

2- Standards of bioimage research data management

3- Bioimage data collection

4- Data publication and archiving

Data Provenance

Provenance is the detailed description of the history of the data and how they were generated. Here is an example from the ArrayExpress database, where an accurate description of the data allows the microarray data to be reused. As you can see in this example from the E-MTAB-6980 dataset, there is a rich description of the study design, organism, platform and timing of data collection.
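Provenance can also be recorded in a machine-readable way. The following minimal Python sketch is loosely modelled on the W3C PROV idea that an activity *used* some entities and *generated* others; walking back through those links recovers how a file came to be. The activity names, file names and dates are hypothetical, and a real record would follow a standard such as W3C PROV or the repository's own submission format.

```python
# Hypothetical provenance log. Each activity records which entities it
# used and which it generated (the "used" / "generated" idea from W3C PROV).
ACTIVITIES = [
    {"id": "hybridisation", "date": "2019-03-01",
     "used": ["sample_01"], "generated": ["raw_intensities.txt"]},
    {"id": "normalisation", "date": "2019-03-05",
     "used": ["raw_intensities.txt"], "generated": ["normalised.txt"]},
]


def generating_activity(entity, activities=ACTIVITIES):
    """Return the activity that generated `entity`, or None for raw inputs."""
    for activity in activities:
        if entity in activity["generated"]:
            return activity
    return None


def lineage(entity, activities=ACTIVITIES):
    """List the activities behind `entity`, walking backwards from it."""
    steps, to_visit, seen = [], [entity], set()
    while to_visit:
        current = to_visit.pop()
        if current in seen:
            continue  # guard against cycles in a malformed log
        seen.add(current)
        activity = generating_activity(current, activities)
        if activity is not None:
            steps.append(activity["id"])
            to_visit.extend(activity["used"])
    return steps


print(lineage("normalised.txt"))  # ['normalisation', 'hybridisation']
```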

Exercise

- Can you extract the data provenance from dataset E-MTAB-7933?

As you can see in this picture, you will find the data provenance under the protocol and experimental factors tabs.

Vocabularies are FAIR

Metadata and data should be described using vocabularies that themselves comply with FAIR, which means that the vocabularies should:

F: use globally unique and persistent identifiers

A: have accessible documentation that extensively describes these identifiers

I: be interoperable

R: be easy to reuse and interpret by both humans and machines
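A small Python sketch of what using a FAIR vocabulary looks like in practice: a free-text value is annotated with an ontology term ID and the persistent, resolvable IRI for that term. The OBO Foundry PURL pattern and the NCBI Taxonomy IDs 9606 (Homo sapiens) and 3702 (Arabidopsis thaliana) are real; the hand-made lookup table is an assumption for illustration, since in practice you would look terms up in the ontology itself.

```python
# OBO Foundry ontologies publish each term at a persistent URL of this form.
OBO_PURL = "http://purl.obolibrary.org/obo/"

# Hand-made lookup from free text to ontology term IDs (CURIEs); in practice
# you would look terms up in the ontology itself.
TERMS = {
    "human": "NCBITaxon:9606",                 # Homo sapiens
    "homo sapiens": "NCBITaxon:9606",
    "arabidopsis thaliana": "NCBITaxon:3702",
}


def curie_to_iri(curie):
    """Expand e.g. 'NCBITaxon:9606' to its OBO PURL."""
    prefix, local_id = curie.split(":", 1)
    return f"{OBO_PURL}{prefix}_{local_id}"


def annotate(value):
    """Attach a term ID and resolvable IRI to a free-text value, if known."""
    curie = TERMS.get(value.strip().lower())
    return {
        "label": value,
        "term": curie,
        "iri": curie_to_iri(curie) if curie else None,
    }


print(annotate("Human")["iri"])
# http://purl.obolibrary.org/obo/NCBITaxon_9606
```

The label stays human-readable, while the IRI gives machines an unambiguous, globally unique reference (the F and R points above).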

Exercise

You are a researcher working in the field of food safety and you are running a clinical trial. Do you know how to choose the right vocabularies and ontologies for it?

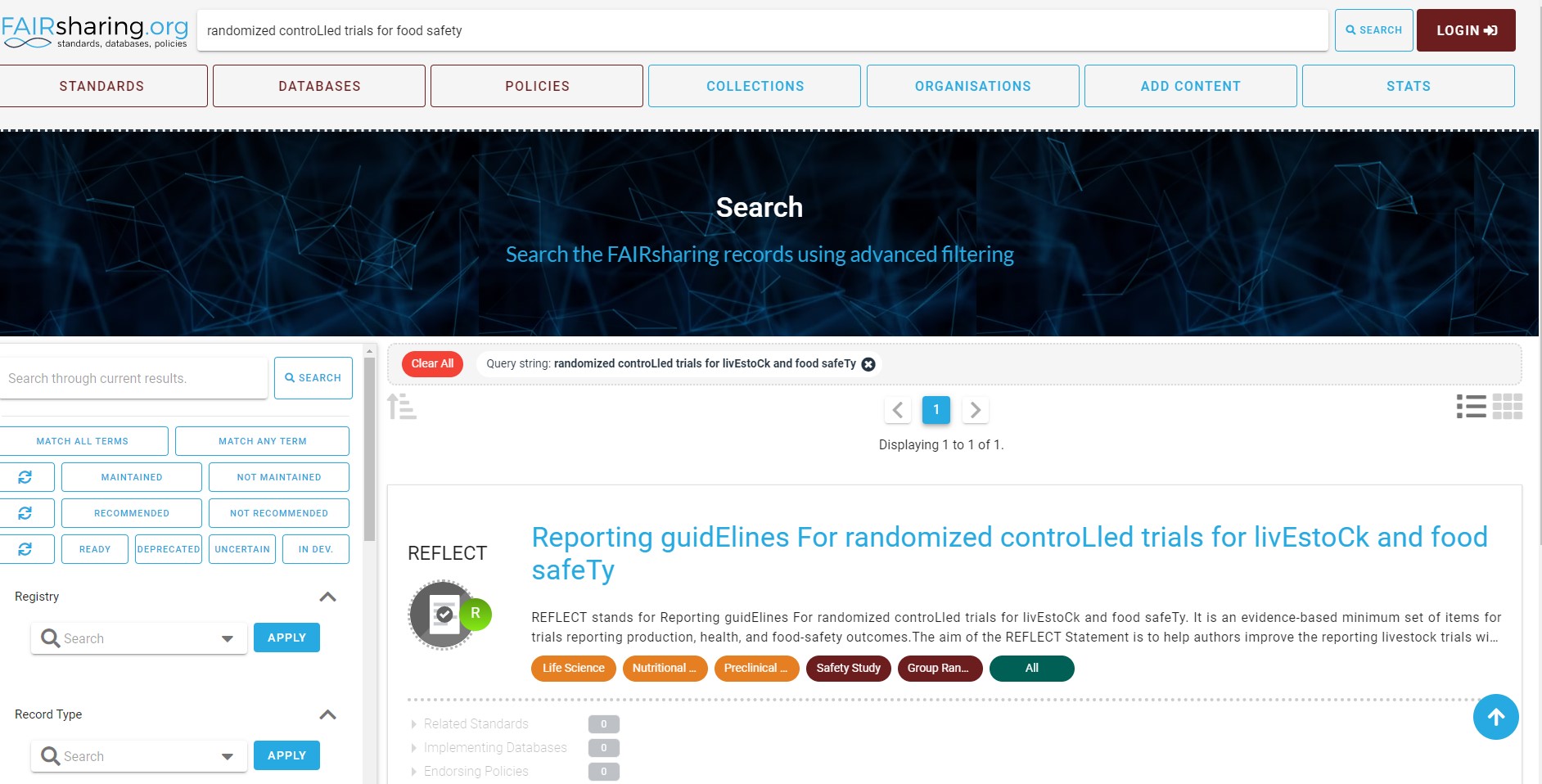

It is time to introduce you to FAIRsharing, a resource for standards, databases and policies. FAIRsharing helps researchers identify suitable repositories, standards and databases for their data. It also lists the latest policies from governments, funders and publishers for FAIRer data.

You can use the search wizard to look for guidelines on reporting the data and metadata of randomized controlled trials in livestock and food.


In the results section, you will find the REFLECT guidelines.


For each resource or guideline, you will find general information, a relationship graph, and the organizations funding and maintaining the resource.


Linked metadata

When uploading your dataset to any database, you should include the following information:

1- Any additional datasets that supplement your data

2- Whether your dataset is built on another dataset
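Both kinds of link can be stated as qualified references in the metadata. This Python sketch borrows field names and relation types from the DataCite metadata schema (`relatedIdentifier`, `relatedIdentifierType`, `relationType`, with the values `IsDerivedFrom` and `IsSupplementedBy`); the record itself and all DOIs are hypothetical placeholders, not real datasets, and the `related` helper is illustrative only.

```python
# DataCite relationType values used below (a small subset of that vocabulary).
ALLOWED_RELATIONS = {"IsDerivedFrom", "IsSupplementedBy", "IsSupplementTo", "References"}

# Hypothetical record; all DOIs are placeholders, not real datasets.
dataset = {
    "identifier": "10.1000/example.dataset.v2",
    "relatedIdentifiers": [
        {   # our dataset was built on this earlier dataset
            "relatedIdentifier": "10.1000/example.dataset.v1",
            "relatedIdentifierType": "DOI",
            "relationType": "IsDerivedFrom",
        },
        {   # this other dataset supplements ours
            "relatedIdentifier": "10.1000/example.supplement",
            "relatedIdentifierType": "DOI",
            "relationType": "IsSupplementedBy",
        },
    ],
}


def related(record, relation):
    """Return the identifiers linked to `record` by the given relation."""
    if relation not in ALLOWED_RELATIONS:
        raise ValueError(f"unknown relation type: {relation}")
    return [
        link["relatedIdentifier"]
        for link in record["relatedIdentifiers"]
        if link["relationType"] == relation
    ]


print(related(dataset, "IsDerivedFrom"))  # ['10.1000/example.dataset.v1']
```

The relation type is what makes the reference *qualified*: a reader (or machine) knows not just that two datasets are linked, but how.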

Exercise

How could you interlink data to your dataset?

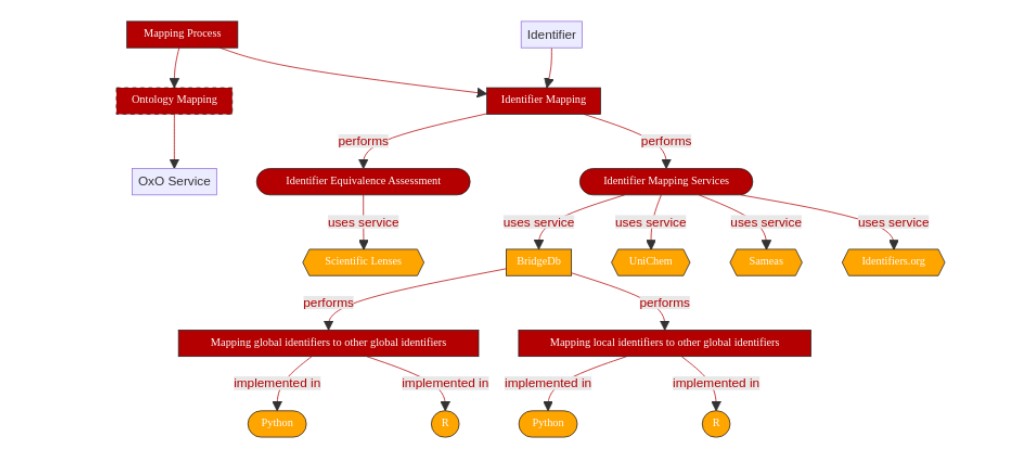

One way to do this is to follow the FAIR Cookbook recipe on interlinking data from different resources, as presented in this graphical overview. You can find the recipe here.

Metadata and data are always available

Maintaining datasets in a public database comes at a cost, so the data themselves may not always be kept available. Metadata, however, are small and easy to maintain, not only in the database but also on researchers’ own computers, so they can remain available even when the data are not. The same applies to sensitive data: the metadata can be available and provide the researchers’ contact details, how to get the data, and the data provenance.

Usually, when data are generated, the data and metadata are stored in separate files. As a researcher, you should ensure that each file refers to the other.
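A minimal Python sketch of two-way referencing, under assumptions of our own: the data file points to its metadata through a hypothetical naming convention (`data.csv` → `data.csv.meta.json`), and the metadata points back to the data by file name plus a SHA-256 checksum, so you can also detect whether the data have changed since the metadata were written. The convention and field names are illustrative, not a repository requirement.

```python
import hashlib
import json
import tempfile
from pathlib import Path


def sha256_of(path):
    """Checksum that ties the metadata to the exact content of the data file."""
    return hashlib.sha256(Path(path).read_bytes()).hexdigest()


def sidecar_for(data_path):
    """Naming convention: data.csv -> data.csv.meta.json (data -> metadata)."""
    data_path = Path(data_path)
    return data_path.with_name(data_path.name + ".meta.json")


def write_sidecar(data_path, metadata):
    """Write a metadata file that names and fingerprints its data file."""
    data_path = Path(data_path)
    record = dict(metadata, data_file=data_path.name, sha256=sha256_of(data_path))
    sidecar = sidecar_for(data_path)
    sidecar.write_text(json.dumps(record, indent=2))
    return sidecar


def check_sidecar(sidecar_path):
    """True if the data file named in the metadata exists and is unchanged."""
    sidecar_path = Path(sidecar_path)
    record = json.loads(sidecar_path.read_text())
    data_path = sidecar_path.with_name(record["data_file"])
    return data_path.exists() and sha256_of(data_path) == record["sha256"]


with tempfile.TemporaryDirectory() as tmp:
    data = Path(tmp) / "heart_rate.csv"
    data.write_text("patient_id,heart_rate_bpm\nP001,72\n")
    sidecar = write_sidecar(data, {"title": "Example heart-rate data"})
    print(sidecar.name)            # heart_rate.csv.meta.json
    print(check_sidecar(sidecar))  # True
    data.write_text("patient_id,heart_rate_bpm\nP001,99\n")
    print(check_sidecar(sidecar))  # False
```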

Resources

You can learn more about how to describe your data using FAIR vocabularies and formal language for knowledge representation from the following:

Recipe from the FAIR Cookbook on FAIR and the notion of metadata

RDMkit explanation of machine readability: Machine readability

Read more about vocabularies and ontologies from Vocabularies and ontology

This is a nice introduction to metadata from the Ed-DaSH Carpentries course: Introduction to metadata

The following recipe from the FAIR Cookbook provides instructions on how to create metadata profiles: Metadata profiles

RDMkit explanation on how to manage metadata: Metadata management

This lesson covers types of repositories and gives examples of domain-specific repositories: ED-DaSH lesson on how to choose a data repository, How do we choose a research data repository?

FAIRsharing provides useful information on writing domain-specific metadata; you can find it here

A recipe from the FAIRcookbook on how to Interlink data from different sources

A nice guideline on How can you record data provenance

FAIR Cookbook recipe on Audit of the provenance process

FAIR principles

This episode covers the following principles:

(I2) (meta)data use vocabularies that follow FAIR principles

(I1) (meta)data use a formal, accessible, shared, and broadly applicable language for knowledge representation

(I3) (meta)data include qualified references to other (meta)data

(A2) Metadata are accessible, even when the data are no longer available

Content from Identifiers


Outcomes

1- Explain the definition and importance of using identifiers

2- Illustrate what persistent identifiers are

3- Give examples of the structure of persistent identifiers

Persistent identifiers

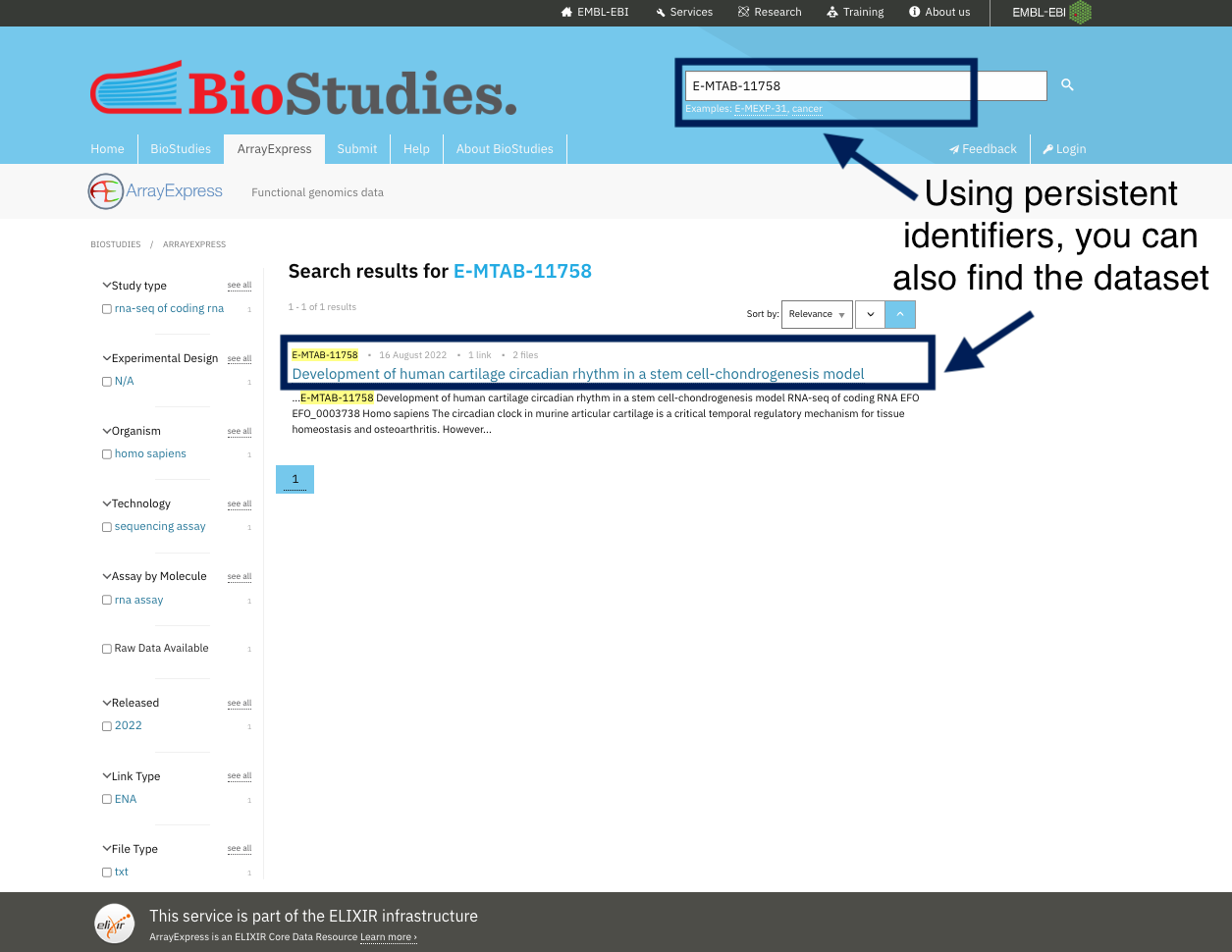

Persistent identifiers are long-lasting references to digital resources, such as datasets, and provide the information required to reliably identify, verify and locate your research data. Commonly, a persistent identifier is a unique record ID in a database, or a unique URL that takes a researcher to the data in question in a database.

The resource might be a publication, a dataset or a person. Persistent identifiers have to be globally unique: only your data are identified by this ID, and it is never used by anyone else anywhere in the world. In addition, these IDs must not become invalid over time. Watch our RDMBites on persistent identifiers to learn more.

Identifiers are a very important concept in the FAIR principles and are considered one of their pillars. They make your data more Findable (F).

Find the PID

Remember our example of metadata types from ArrayExpress in the first episode. Can you tell what the persistent identifier of this dataset is?

The PID in this case, called the “Accession” in ArrayExpress, is E-MTAB-7933. If you search for this accession number, you will find the dataset. Have you also noticed that the data files are named using this PID?

It is important to note that when you upload your data to a public repository, the repository will create this ID for you automatically.

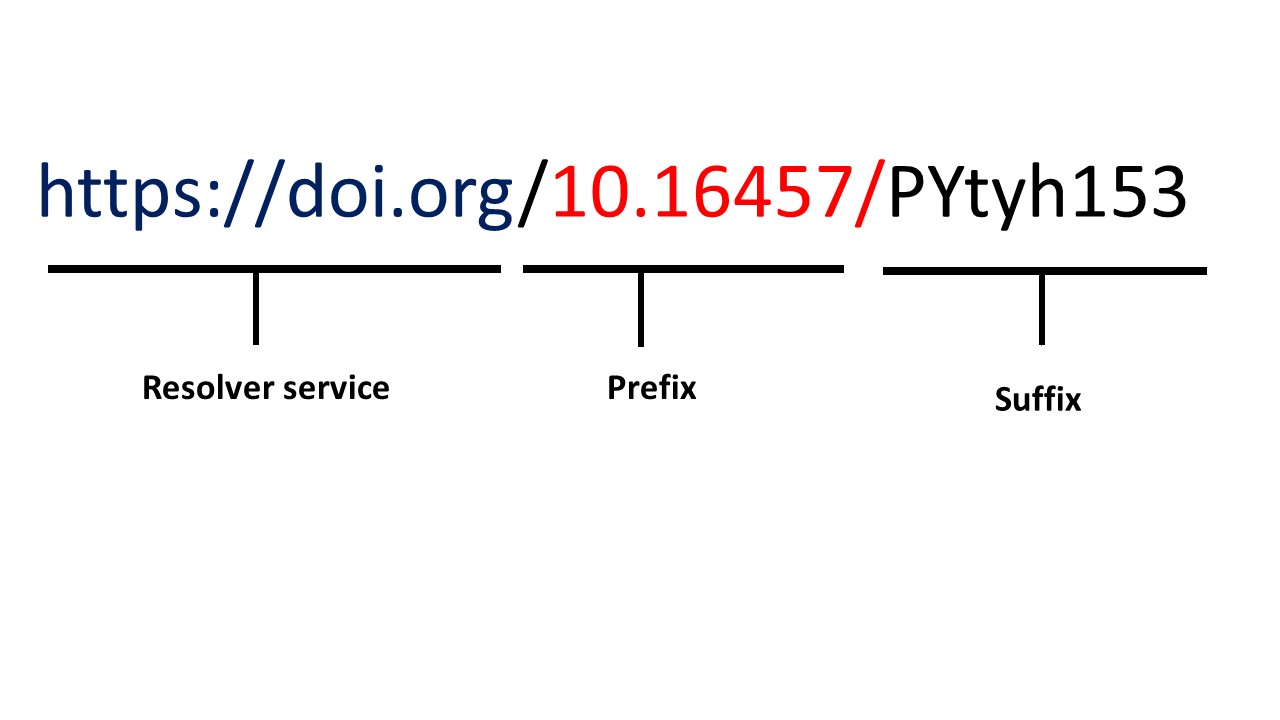

The structure of persistent identifiers

As you can see in this picture, the structure of a persistent identifier consists of:

1- The resolver service: a domain that is unique and specific to each community, e.g. ORCID for researchers and DOI for publications

2- Prefix: a unique number that represents a category; e.g. a DOI prefix consists of the directory indicator 10 and a number specific to the publisher

3- Suffix: the unique dataset number, which is unique under its prefix
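To make the three parts concrete, here is a minimal Python sketch that splits a DOI URL into resolver, prefix and suffix. It handles DOIs only (ORCID iDs and repository accessions have different syntax). The prefix `10.5281` is the one Zenodo uses; the suffix in the example is a made-up number for illustration.

```python
from urllib.parse import urlparse


def split_doi_url(pid_url):
    """Split a DOI URL into (resolver, prefix, suffix)."""
    parsed = urlparse(pid_url)
    resolver = f"{parsed.scheme}://{parsed.netloc}"
    doi = parsed.path.lstrip("/")
    # The prefix ends at the first "/"; the suffix may contain further "/".
    prefix, sep, suffix = doi.partition("/")
    if not sep or not prefix.startswith("10.") or not suffix:
        raise ValueError(f"not a DOI URL: {pid_url!r}")
    return resolver, prefix, suffix


print(split_doi_url("https://doi.org/10.5281/zenodo.1234567"))
# ('https://doi.org', '10.5281', 'zenodo.1234567')
```

Splitting only at the first `/` matters because DOI suffixes are allowed to contain further slashes.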

Resources

The resources listed below provide an overview of what you need to know about identifiers.

- Unique and persistent identifiers: a practical explanation of unique and persistent identifiers from the FAIR Cookbook

Identifiers: another nice explanation from RDMkit

Machine actionability: identifiers are also important for machine readability, a nice explanation from RDMkit that describes machine readability

Examples and explanation of different identifiers from FAIRsharing.org https://fairsharing.org/search?recordType=identifier_schema

Content from Access


Outcomes

1- Illustrate what a communications protocol is and the criteria for an open and free protocol

2- Give examples of databases that use protocols with different authentication processes

3- Interpret the usage licence associated with different datasets

Standard communication protocol

Simply put, a protocol is a set of rules that lets two computers exchange data. The protocol ensures the security and authenticity of your data: once these are verified, the data are transferred to the other computer.

Having a protocol does not guarantee that your data are accessible. However, you can choose a protocol that is free, open and allows easy exchange of information. One step you can take is to choose the right database: when you upload your data to a database, the database uses a protocol that allows users to load the data in their web browser. This protocol makes the data easy to access while still keeping them secure.
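Because HTTP(S) is open, free and universally implemented, a few lines of Python's standard library are enough to retrieve a record by its identifier. This is a sketch only: the ArrayExpress address pattern below is an assumption, so check the repository's own documentation for its current URLs. `fetch` needs network access; building the request does not.

```python
from urllib.parse import quote
from urllib.request import Request, urlopen

# Assumed address pattern for ArrayExpress study pages; check the
# repository's own documentation for its current URLs.
BASE_URL = "https://www.ebi.ac.uk/biostudies/arrayexpress/studies/"


def build_request(accession):
    """Prepare an HTTPS GET request that retrieves a record by its identifier."""
    return Request(BASE_URL + quote(accession), headers={"Accept": "text/html"})


def fetch(accession, timeout=30):
    """Send the request (needs network access) and return status and body."""
    with urlopen(build_request(accession), timeout=timeout) as response:
        return response.status, response.read()


request = build_request("E-MTAB-7933")
print(request.get_method(), request.full_url)
# GET https://www.ebi.ac.uk/biostudies/arrayexpress/studies/E-MTAB-7933
```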

Authentication process

Authentication is the process a protocol uses for verification. To understand it, suppose three people are named John Smith and we do not know which of them submitted the data. The solution is to assign each person a unique ID that both machines and humans can interpret, so you know who actually submitted the data. Doing so is a form of authentication. Many databases use this, such as Zenodo, where you can sign up with your ORCID iD so that the database can identify you.
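Unique person IDs such as ORCID iDs are also designed to be checked by machines: the last character is a checksum (ISO 7064 MOD 11-2) that catches most typing mistakes. The Python sketch below validates the format and checksum only; it does not prove that the iD is registered or that it belongs to the person submitting the data, which is what the ORCID sign-in itself does. `0000-0002-1825-0097` is the sample iD used in ORCID's own documentation.

```python
import re

# ORCID iD layout: four blocks of four characters; the last may be "X".
ORCID_PATTERN = re.compile(r"^\d{4}-\d{4}-\d{4}-\d{3}[\dX]$")


def orcid_check_character(first_15_digits):
    """ISO 7064 MOD 11-2 check character, as used for ORCID iDs."""
    total = 0
    for digit in first_15_digits:
        total = (total + int(digit)) * 2
    result = (12 - total % 11) % 11
    return "X" if result == 10 else str(result)


def is_well_formed_orcid(orcid):
    """Check the format and checksum (not whether the iD is registered)."""
    if not ORCID_PATTERN.match(orcid):
        return False
    digits = orcid.replace("-", "")
    return orcid_check_character(digits[:15]) == digits[15]


print(is_well_formed_orcid("0000-0002-1825-0097"))  # True
print(is_well_formed_orcid("0000-0002-1825-0098"))  # False (one digit mistyped)
```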

Exercise

After reading this guide on different protocol types, do you know which protocol ArrayExpress uses?

::: solution

As we explained before when using RDMkit, going through the page you will find different types of protocols explained.

From this part, you can see that the protocol used by ArrayExpress is HTTP (HyperText Transfer Protocol), highlighted in purple.

::::

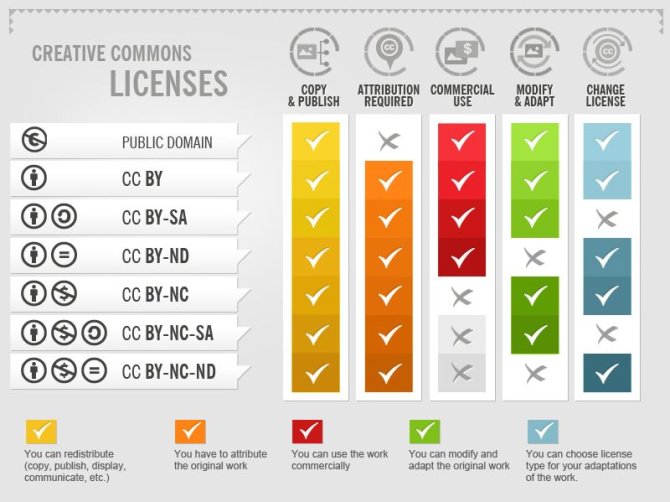

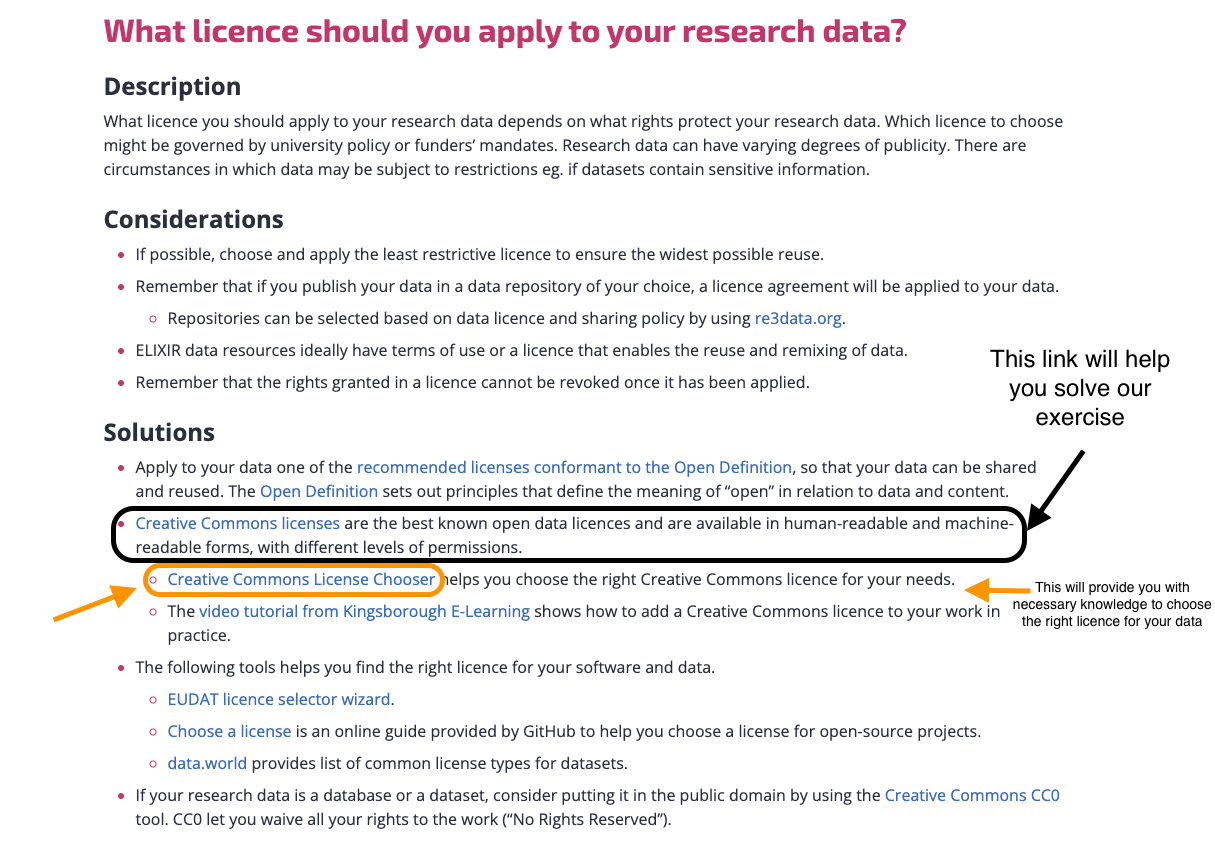

Data usage licence

A data usage licence describes the legal rights others have to use your data. When you publish your data, you should state clearly in what capacity they can be used. Bear in mind that a clear licence is important for both machine and human reuse of your data. There are many licences you can use, e.g. the MIT licence or Creative Commons licences. These licences describe precisely the rights to reuse the data; have a look at the resources below to learn more about them.
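For machines to act on a licence, it helps to record it as a standard identifier rather than free text. This Python sketch stores the licence as an SPDX identifier (such as `CC-BY-4.0` or `MIT`, which are real SPDX short names) plus a URL, and checks a record for problems. The `license` field layout and the small allow-list are assumptions for illustration; a real check would use the full SPDX licence list or the repository's own schema.

```python
# Hypothetical metadata record with a machine-readable licence.
record = {
    "title": "Example heart-rate data",
    "license": {
        "id": "CC-BY-4.0",
        "url": "https://creativecommons.org/licenses/by/4.0/",
    },
}

# A few SPDX licence identifiers (a small subset of the full SPDX list).
KNOWN_SPDX_IDS = {"CC-BY-4.0", "CC0-1.0", "CC-BY-SA-4.0", "MIT"}


def licence_problems(record):
    """Return a list of problems with the record's licence (empty if fine)."""
    lic = record.get("license")
    if not lic:
        return ["no licence declared"]
    problems = []
    if lic.get("id") not in KNOWN_SPDX_IDS:
        problems.append(f"unrecognised licence id: {lic.get('id')!r}")
    if not lic.get("url", "").startswith("https://"):
        problems.append("licence URL missing or not HTTPS")
    return problems


print(licence_problems(record))                 # []
print(licence_problems({"title": "untitled"}))  # ['no licence declared']
```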

Exercise

- From this RDMkit guideline on types of licence, which licence is used by each of the following datasets?

1- A large-scale COVID-19 Twitter chatter dataset for open scientific research - an international collaboration

2- RNA-seq of circadian timeseries sampling (LL2-3) of 13-14 day old Arabidopsis thaliana Col-0 (24 h to 68 h, sampled every 4 h)

::: solution

The link we provided explains the types of licence, and as you read the following section from RDMkit, you will find the following:

From this section, you can see the licence used for each dataset:

1- A large-scale COVID-19 Twitter chatter dataset for open scientific research - an international collaboration: CC BY 4.0

2- RNA-seq of circadian timeseries sampling (LL2-3) of 13-14 day old Arabidopsis thaliana Col-0 (24 h to 68 h, sampled every 4 h): CC BY 4.0


::::

Sensitive data

Sensitive data are data that, if made publicly available, could have harmful consequences for individuals, groups, nations or ecosystems, and so need to be secured from unauthorised access. To determine whether your data are sensitive, you should consult the relevant national laws, which vary by country. The following resources tell you more about sensitive data and what to do if your data are sensitive.

Resources

- A nice recipe from FAIRcookbook on SSH protocols

- A nice explanation from RDMkit on protocols and how they help you protect your data: Protocols and safety of data transfer

Licensing your work is not as simple as it sounds; here are some resources to help you find the right licence:

- Why you should assign a licence to your protocol, from RDMkit: here

- Recipes from the FAIR Cookbook with step-by-step instructions for:

  - licence
  - software licence
  - data licence
  - declaring data permitted uses

- To learn more about Creative Commons licences, check this link: Creative Commons licence

To get more information on sensitive data, you can have a look at these resources:

FAIR principles

This episode covers the following principles:

1- (A1) (meta)data are retrievable by their identifier using a standardised communications protocol

2- (R1.1) (meta)data are released with a clear and accessible data usage licence

Content from Registration


Outcomes

1- Define what a data repository is

2- Illustrate the importance of indexed data repositories

3- Summarize the steps of indexing data in a searchable repository

Indexed data repository

What is a data repository?

A data repository is a general term for any storage space where you deposit data, metadata and any associated research outputs. Note that a database is more specific: it is mainly for storing your data.

Types of data repository

There are many types of data repository, classified based on:

1- The structure of the data: Data warehouse, Data lake and Data mart

The following table summarizes these differences:

| Data repository | Data warehouse | Data mart | Data lake |
|---|---|---|---|
| Supported data types | Structured | Highly structured | Structured, semi-structured, unstructured, binary |
| Data quality | Curated | Highly curated | Raw data |

2- The purpose of the data repository:

Controlled access repository

Discipline specific repository

Institutional repository

General data repository

The following image summarizes these types with different examples:

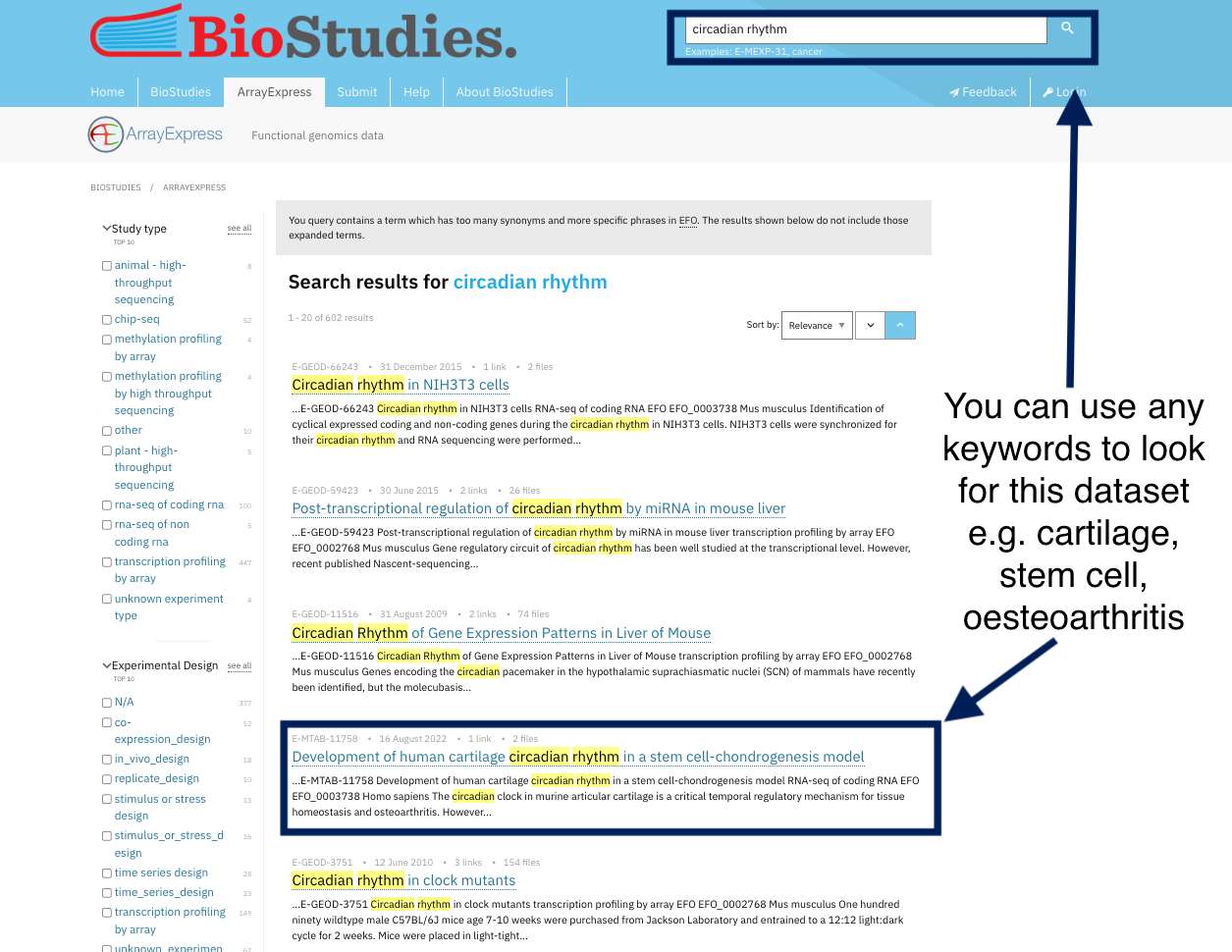

Importance of indexed data repository

To ensure data findability, your data should be uploaded to a public repository where they can be searched and found. This makes your data comply with the fourth findability principle (F4), which states that (meta)data are registered or indexed in a searchable resource. There are numerous databases where you can upload your data, and these are typically specific to particular data types. For example, ArrayExpress holds microarray and RNA-seq data. These databases have rules in place to help make sure that your data will be FAIR.

After you upload your data to such a database, they are assigned an ID and indexed in the database. So whenever you search for the ID, or even for a keyword describing your data, you will find them.

Take a look at the ArrayExpress database, where all datasets are indexed and you can easily find any dataset using the search tools. Because the data are indexed, you can find a dataset using keywords, not only its PID. For example, if you want to locate human NSCL cell lines, you can just type this into the search box and find the dataset. Indexing and registering datasets also means they are curated in such a way that you can discover them using different keywords.

For example, you can find the same dataset by using its identifiers or by using keywords chosen by the dataset’s authors to describe it.
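The idea behind keyword search can be sketched with a tiny inverted index in Python: every word in a record's identifier, title and keywords points back to that record's identifier, so a search by keyword or by PID finds the same dataset. The records are hypothetical, and real repositories use full search engines with ranking, synonyms and curation; this only shows the principle.

```python
import re
from collections import defaultdict

# Hypothetical repository records: identifier -> title and author keywords.
RECORDS = {
    "DS-0001": {"title": "RNA-seq of human NSCL cell lines",
                "keywords": ["lung cancer", "cell line"]},
    "DS-0002": {"title": "Circadian time series in Arabidopsis",
                "keywords": ["circadian", "plant"]},
}


def tokens(text):
    """Lower-case words and numbers, e.g. 'DS-0001' -> ['ds', '0001']."""
    return re.findall(r"[a-z0-9]+", text.lower())


def build_index(records):
    """Inverted index: word -> set of identifiers whose metadata contain it."""
    index = defaultdict(set)
    for pid, record in records.items():
        # The identifier itself, the title and the keywords are all indexed.
        for text in [pid, record["title"], *record["keywords"]]:
            for word in tokens(text):
                index[word].add(pid)
    return index


def search(index, query):
    """Return identifiers whose metadata contain every word of the query."""
    words = tokens(query)
    if not words:
        return set()
    hits = set(index.get(words[0], set()))
    for word in words[1:]:
        hits &= index.get(word, set())
    return hits


index = build_index(RECORDS)
print(search(index, "human NSCL"))  # {'DS-0001'}
print(search(index, "DS-0002"))     # {'DS-0002'}
```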

Exercise

One way to index your dataset is to upload it to Zenodo. Can you use one of the resources we recommended before to find out how to do this?

1- RDMkit

2- FAIRcookbook

3- FAIRsharing

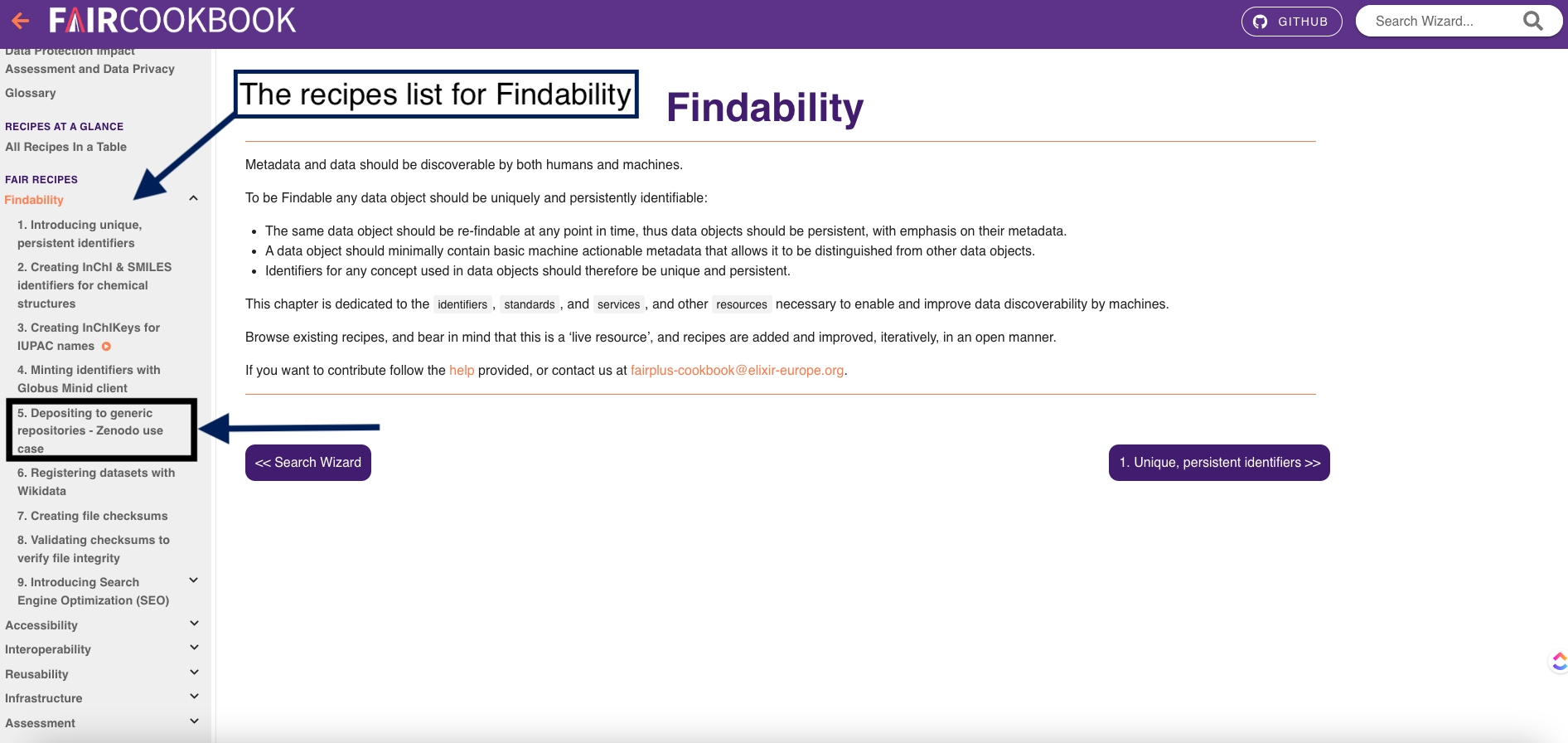

Since you want a technical guideline, the FAIR Cookbook and RDMkit are the best places to start. We will start with the FAIR Cookbook; we explained the structure of a recipe earlier, so let’s look for a suitable recipe. As you navigate the FAIR Cookbook homepage, you will find different tabs covering each of the FAIR principles. For instance, under the accessibility tab you will find all the recipes that can help you make your data accessible.

- Follow these steps to find the recipe:

1- In this exercise, we are looking for a recipe on indexing or registering a dataset in a searchable resource, which you can find under the findability tab. Can you find it in this picture?

2- Click on the findability tab

3- On the left side, you will find a navigation bar that helps you find the different recipes that make your data findable.

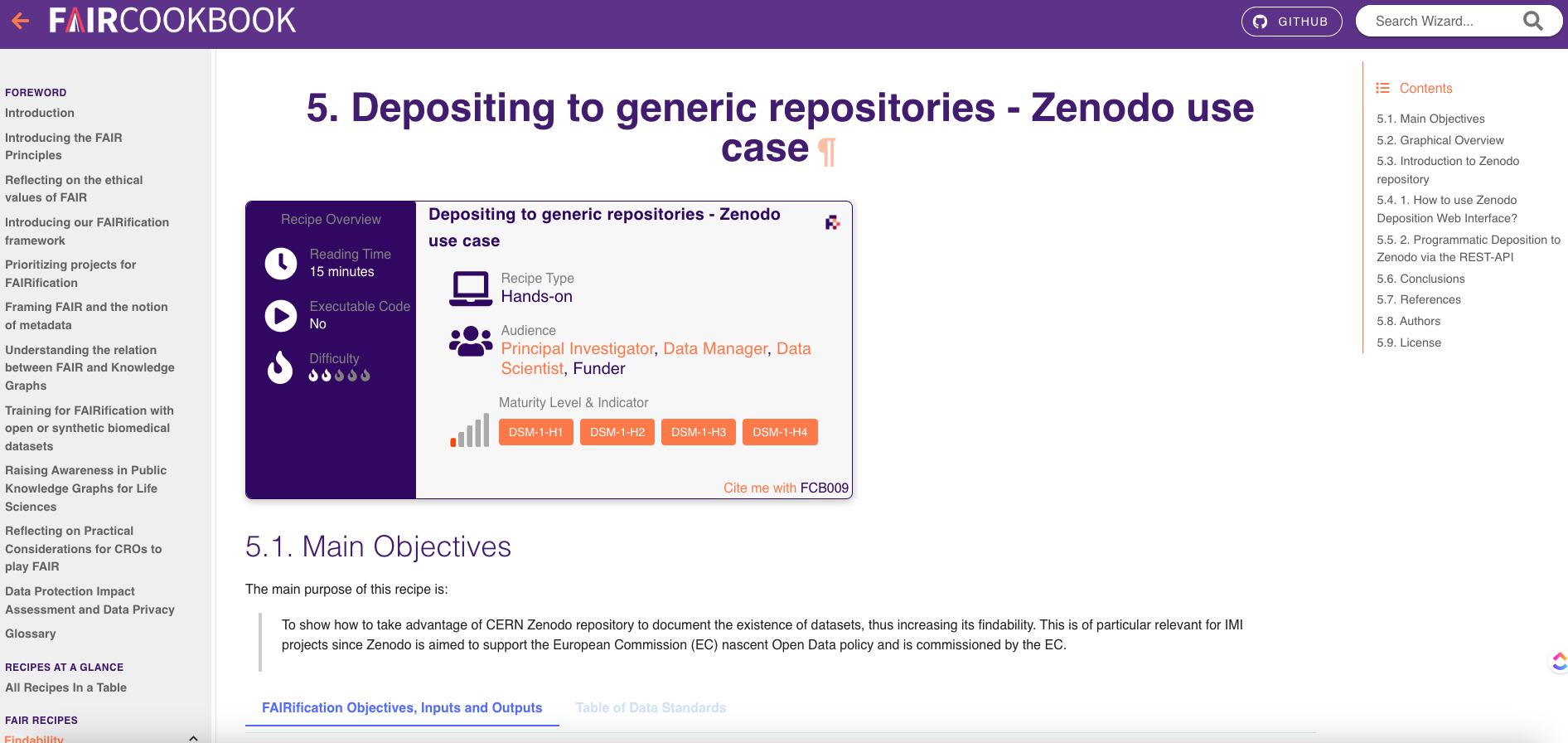

4- As you can see here, there is a recipe on registering datasets with Wikidata and another on depositing to generic repositories (Zenodo use case).

Once you click on one of these recipes, you will find the following:

- Requirements that you need to apply the recipe to your dataset

- The instructions

- References and further readings

- Authors and licence

In our specialized courses, we will give you examples of how to upload your data to specialized repositories.

Why should you upload your data to a database?

1- Databases assign your data a unique persistent identifier.

2- Your data will be indexed, making it easier to find.

3- Some databases will let you easily connect your dataset to other datasets.

4- Dataset licensing: you can attach a licence to your dataset, and some databases offer controlled or limited access to protect your data.

By uploading data to a database, you comply with the following FAIR principles:

F1 (Meta)data is assigned a globally unique and persistent identifier

F3 Metadata clearly and explicitly include the identifier of the data they describe

F4 (Meta)data is registered or indexed in a searchable resource

Uploading to a database also makes your data more accessible, because the standardized communications protocol and authentication are set up for your data automatically:

A1 (Meta)data is retrievable by their identifier using a standardised communications protocol

A1.1 The protocol is open, free, and universally implementable

A1.2 The protocol allows for an authentication and authorisation procedure, where necessary

A2 Metadata is accessible, even when the data is no longer available

I3 (Meta)data include qualified references to other (meta)data

R1.1 (Meta)data is released with a clear and accessible data usage license

How to choose the right database for your dataset?

1- Check the community standards for your data; you can find more information in the RDMkit guidelines on domain-specific community standards.

2- Look for resources that describe the databases and check whether they fit your data. You might consider the following:

- Accessibility options

- Licence

One of these resources is FAIRsharing, which provides a registry of databases and repositories. Here is an example where FAIRsharing provides information about a protein database. It includes the following information:

1- General information

2- Which policies use this database?

3- Related community standards

4- Organization maintaining this database

5- Documentation and support

6- Licence

Resources

Our resources provide an overview of data repositories and examples

The FAIR Cookbook and RDMkit both provide excellent instructions for uploading your data into databases:

- FAIRcookbook recipe on Depositing to generic repositories - Zenodo use case

- FAIRcookbook recipe on Registering Datasets in Wikidata

- RDMkit guidelines on Data publications and deposition

- RDMkit guidelines on Finding and reusing existing data

- FAIRcookbook recipe on Search engine optimization

- FAIRsharing offers a nice portal to different examples of databases

FAIR principles

This episode covers the following principles:

1- (F4) (meta)data are registered or indexed in a searchable resource

2- (R1.1) (Meta)data are released with a clear and accessible data usage license